transmediale 2019 was a festival without a topic. This year the organizers wanted to leave it open and avoided giving a specific direction or tone for emerging thoughts and content. Instead, the festival program was built around the question: What moves you? Emotions and feelings were examined in talks, workshops and performances to open up discussion about the affective dimensions of digital culture today.

Of course it is impossible to attend the whole program, so I console myself with the knowledge that the parts I missed I can usually catch later on transmediale's YouTube channel, where they publish most of the talks and panels.

Here are some of my transmediale 2019 highlights:

Workshop(s)

I had the chance to get to Berlin a bit earlier to attend the workshop Visual Evidence: Methods and Tools for Human Rights Investigation by Adam Harvey (VFRAME) and Jeff Deutch (Syrian Archive). The workshop centered on the research and development of tools for managing huge amounts of video material from conflict areas, specifically Syria. The Syrian Archive collects material in order to document and archive evidence for possible future use in holding war criminals accountable for their actions. The challenge for human rights activists working with footage from Syria is the massive amount of material: manually sorting out the relevant videos for archiving simply takes too much time. To tackle this challenge, the Syrian Archive started a collaboration with artist Adam Harvey to develop computer vision tools that help find relevant material among hours of footage. The videos are often filmed by non-professionals in violent, often life-threatening situations, which results in a very specific video aesthetic that is relevant when training object recognition.

After learning about the archiving challenges of human rights activists, Adam took us through the process of developing VFRAME (Visual Forensics and Metadata Extraction), a collection of open source computer vision tools that aim to aid human rights investigations dealing with unmanageable amounts of video footage. The first tool simply pre-processed frames for visual query: a video was rendered to a single image showing a selection of its scenes. This let the activists see the different scenes of a video at a glance, so they could process the information in a several-minute-long video in just 10 seconds. The workflow was now much faster, yet it would still take years to process all of the video footage. What the activists were looking for in the videos was evidence of human rights abuses and children's rights abuses, as well as illegal weapons and ammunition. To automate some of the workload, as a first step, Adam and the Syrian Archive have started training a neural network for object recognition to identify weapons and ammunition. Adam used the AO-2.5RT cluster munition as an example to talk about the challenges they had.
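To make the idea of rendering a video to one scene overview more concrete, here is a minimal sketch in Python. It is not VFRAME's actual code: the function names, the threshold value and the simple frame-difference heuristic are my own assumptions for illustration, and frames are represented as plain nested lists of grayscale values rather than decoded video.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = sum(
        abs(pa - pb)
        for row_a, row_b in zip(frame_a, frame_b)
        for pa, pb in zip(row_a, row_b)
    )
    return total / (len(frame_a) * len(frame_a[0]))


def select_scene_frames(frames, threshold=30.0):
    """Keep a frame whenever it differs strongly from the last kept frame.

    The threshold is a hypothetical value, not one taken from VFRAME.
    """
    keyframes = []
    for frame in frames:
        if not keyframes or mean_abs_diff(frame, keyframes[-1]) > threshold:
            keyframes.append(frame)
    return keyframes


def contact_sheet(keyframes, cols=4, fill=0):
    """Tile equally sized keyframes into one summary image, row by row."""
    height, width = len(keyframes[0]), len(keyframes[0][0])
    blank = [[fill] * width for _ in range(height)]
    sheet = []
    for start in range(0, len(keyframes), cols):
        tiles = keyframes[start:start + cols]
        tiles = tiles + [blank] * (cols - len(tiles))  # pad the last row of tiles
        for y in range(height):
            sheet.append([pixel for tile in tiles for pixel in tile[y]])
    return sheet
```

Calling `contact_sheet(select_scene_frames(frames))` on the decoded frames of a video would give one image with a tile per detected scene. A real pipeline would decode frames with a video library and probably use a more robust scene detector; this sketch only shows the principle of reducing minutes of footage to something that can be scanned in seconds.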

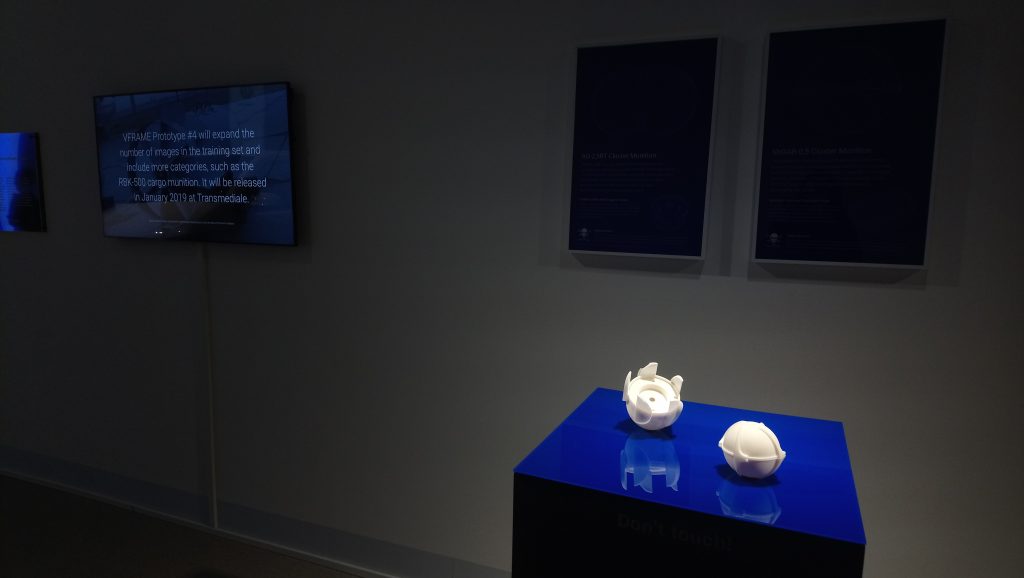

Adam showed us a tool that they have been using for annotating objects, but annotation has been time-consuming, and a greater challenge was not having enough images to actually train the network. While working with the filmed footage, the activists had learned to see patterns in how the object (in this case the AO-2.5RT) appeared (e.g. environments, light conditions, filming angle etc.). Hence one successful solution was to synthesize data, in other words to produce 3D renderings of the ammunition that simulate the aesthetics of the documented videos. The 3D renderings with various light conditions, camera lenses and filming angles provide the neural network with additional data for training. Adam also showed experiments with 3D prints of the AO-2.5RT, but according to him it is far more effective to use the photorealistic 3D renderings.

In a panel discussion later during the conference (#26 Building Archives of Evidence and Collective Resistance) Adam was asked how he felt about developing tools that could possibly be misused. From Adam's perspective he was actually appropriating tools that are already misused. VFRAME provides a different perspective on the use of machine vision in a very specific context. During the Q&A the issue of biased data sets was raised. In the context of this case study Adam made it clear that bias actually needs to be included when searching for something very specific. For him the training images need to capture the variations of a very specific moment, and bias included e.g. the camera type that is often used (phone), the height of the person filming (angle) and the environment where the ammunition is often found (sometimes on the ground, or someone holding it in their hands etc.). When trying to detect a very specific object in a very specific type of (video) material, bias is actually a good thing. Both during the panel and in the workshop it was made clear that processing large amounts of relevant video material and talking with the people capturing the material on site were very valuable when creating the synthetic 3D footage to train object recognition.

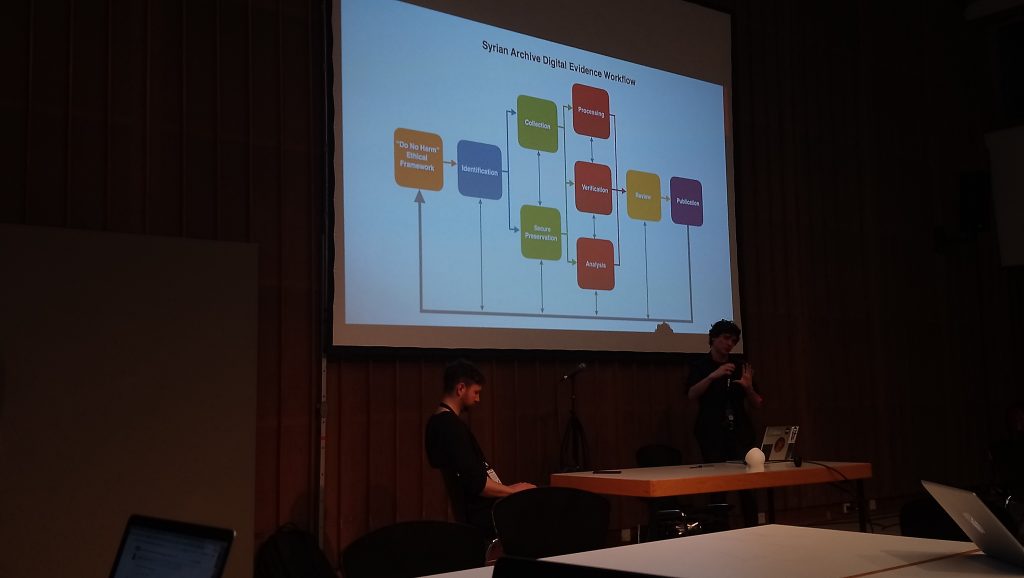

After Adam's presentation of the VFRAME tools the workshop continued with Jeff Deutch taking us through the processes of verifying the footage. Whereas machine learning is developed to flag relevant material for the activists, an important part of the labor is still done manually by humans. One of the important tasks is to connect the pieces of material together and validate the date so it can be used as evidence. Jeff showed us a couple of examples of how counter-narratives to state news were confirmed using various OSINT (open source intelligence) tools, such as reverse image search (Google, TinEye), geolocation (Twitter, comparing objects with satellite images from DigitalGlobe), time verification (Unix timestamps), metadata extraction (e.g. Amnesty's YouTube DataViewer), and collaboration with aircraft spotting organizations.
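As a small illustration of the time verification step, a Unix timestamp (seconds since 1 January 1970 UTC) can be converted into a readable UTC date and compared with the time window of a reported event. This is only a sketch under my own assumptions, not the Syrian Archive's workflow; the function names and the example timestamp are hypothetical.

```python
from datetime import datetime, timezone


def unix_to_utc(timestamp):
    """Convert a Unix timestamp in seconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc)


def within_window(timestamp, window_start, window_end):
    """Check whether a timestamp falls inside a claimed event window (UTC)."""
    return window_start <= unix_to_utc(timestamp) <= window_end


# Hypothetical upload timestamp extracted from a video's metadata.
upload_ts = 1549000000
print(unix_to_utc(upload_ts).isoformat())  # 2019-02-01T05:46:40+00:00

# Hypothetical window in which the documented event is claimed to have happened.
start = datetime(2019, 2, 1, 0, 0, tzinfo=timezone.utc)
end = datetime(2019, 2, 1, 12, 0, tzinfo=timezone.utc)
print(within_window(upload_ts, start, end))  # True
```

Working in UTC avoids mistakes caused by the investigator's local time zone. Note that an upload time only shows that the material existed at that moment, not when it was filmed, which is why it is combined with the other methods mentioned above.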

The workshop and the panel were extremely informative in terms of understanding workflows and how machine vision can be used in contexts outside surveillance capitalism. The workshop also had a hands-on part in which we were to test some of the VFRAME tools. Unfortunately the afternoon workshop was way too short for this, and some debugging and setup issues delayed us enough that we were kicked out of the workshop space before we could get our hands on the tools.

After transmediale I had the chance to visit the Ars Electronica Export exhibition at DRIVE – Volkswagen Group Forum, where VFRAME was exhibited among other artworks. It is definitely one of those projects that mix artistic practice with research and activism, emphasizing the relationship between arts and politics. Another transmediale event related to the workshop and panel mentioned earlier was the very emotional #31 book launch of Donatella Della Ratta's Shooting a Revolution. Visual Media and Warfare in Syria. In the discussion there were several links between her ethnographic study and the work of the Syrian Archive.

Talks

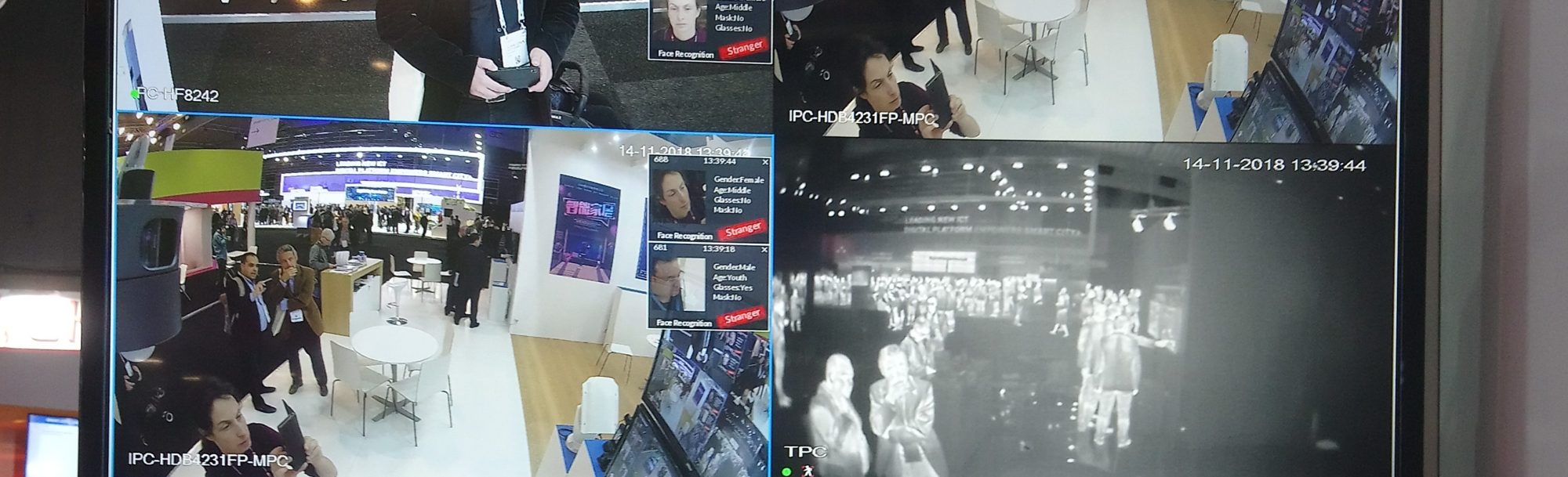

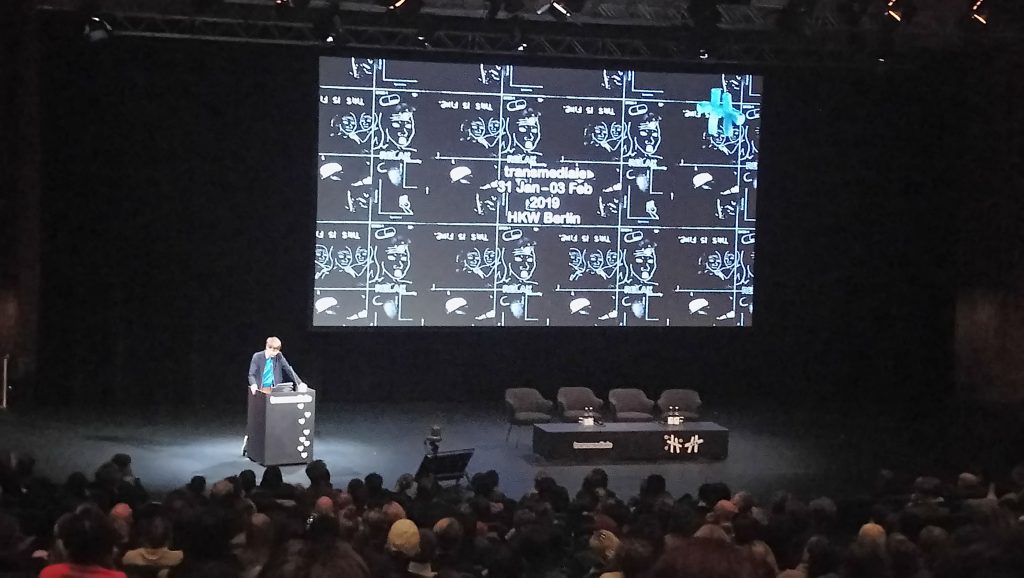

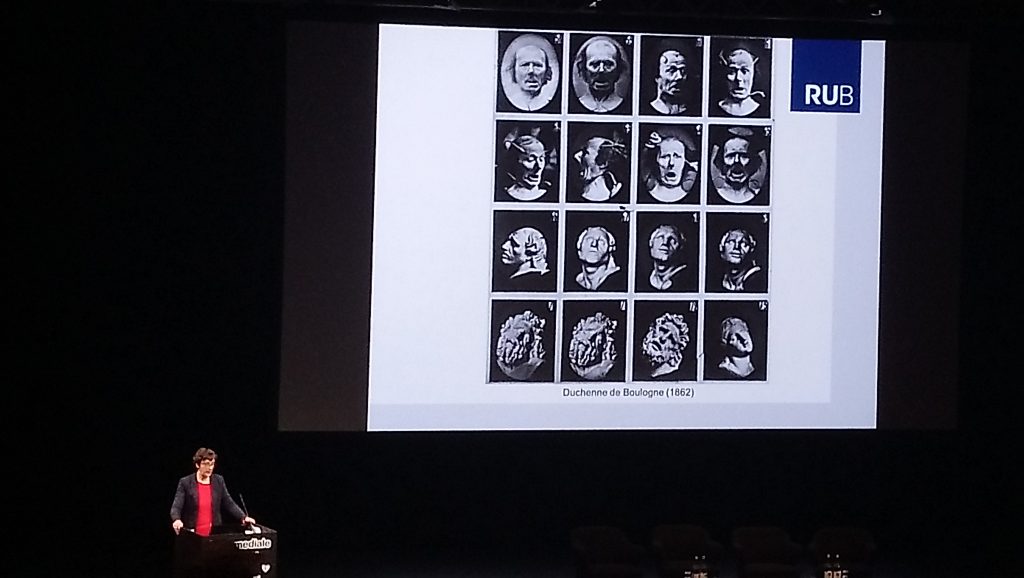

In the #01 Structures of Feeling – transmediale Opening there were some interesting references to machine vision. New York based artist Hannah Davis presented her current work on generating emotional landscapes, experimenting with generative adversarial networks and variational autoencoders. Basically, the landscapes (e.g. mountains or forests) were tagged with emotions (anger, anticipation, trust, disgust, sadness, fear, joy, and surprise or ‘none’) by CrowdFlower platform workers (similar to Amazon Mechanical Turk). Then machine learning algorithms were fed with the data set to generate “angry forests” or “sad mountains” etc. The 20-minute talk was definitely a teaser to look more closely into Hannah's work. Next up was Anna Tuschling, who mentioned a number of interesting examples. With a background in psychology, she talked about how we have tried to understand and represent emotions, coupling e.g. neurologist Duchenne de Boulogne's work in the 1800s with facial recognition technology and applications such as Affectiva (“AFFECTIVA HUMAN PERCEPTION AI UNDERSTANDS ALL THINGS HUMAN – 7,462,713 Faces Analyzed”) and Alibaba Group's AliPay ‘Smile to Pay’ campaign in China.

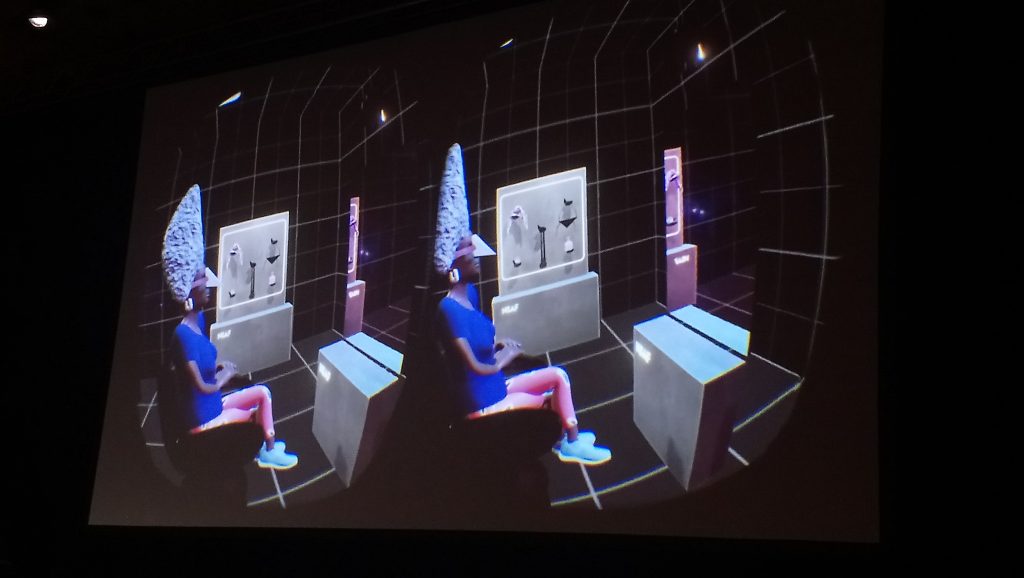

From the #12 Living Networks Talk, Asia Bazdyrieva's & Solveig Suess's Geocinema awoke my interest. They consider machine vision technology such as surveillance cameras, satellite images and cell phones, together with geosensors, as a cinematic apparatus sensing fragments of the earth. The #15 Reworking the Brain Panel with Hyphen-Labs and Tony D Sampson was not quite what I had expected, yet Sampson's presentation connected with the readings we have done on the non-conscious (N. Katherine Hayles, Unthought). He reflected on how brain research has started to affect experience capitalism (the UX industry), asking “What can be done to a brain?” and “What can a brain do?”. In the Q&A Sampson revealed a current interest in the non-conscious states of the brain while sleepwalking, which I found intriguing.

In my opinion one of the best panels was #25 Algorithmic Intimacies with !Mediengruppe Bitnik and Joana Moll, moderated by Taina Bucher. The panel discussed the deepening relationship between humans and machines and how it is mediated by algorithms, apps and platforms. The cohabitation with devices we are dependent on was discussed through examples of the artists' works. !Mediengruppe Bitnik presented three of their works: Random Darknet Shopper, Ashley Madison Angels and Alexiety. All of the works asked important questions about intimacy, privacy, trust and responsibility. The Ashley Madison Angels work bridged well with Joana Moll's work The Dating Brokers, illustrating how our most intimate data (including profile pictures and other images) are shared among dating platforms or sold on, capitalizing on our loneliness.

Ashley Madison is a big dating platform that is specifically marketed to people who feel lonely in their current relationship (marriage), so it encourages adultery. In 2015 the Impact Team hacked the site. The company had not cared too much about the privacy of its customers, and the hackers dumped the breached data, making it available to everyone. The dump was large, containing a huge number of profiles as well as source code and algorithms. It became a unique chance for journalists and researchers to understand how such services are constructed. Among others, !Mediengruppe Bitnik was curious to understand how our love life is orchestrated by algorithms. What the breach revealed was an imbalance between male and female profiles. The service lacked female profiles and had therefore installed 17,000 chat bots. !Mediengruppe Bitnik expected to find amazing AI developments behind these bots, which were to have conversations with clients from different cultures, in various languages, about a number of topics. But they turned out to be very basic chat bots, with 4-5 A4 pages of erotically toned hook-up lines. Well-choreographed flirting was enough to keep up the conversation and keep the client paying for chat time.

In the Ashley Madison Angels video installation the pick-up lines are read by avatars wearing black “Eyes Wide Shut” type Venetian masks. After the talk I asked Carmen (!Mediengruppe Bitnik) about the masks. She told me that they were a feature provided by the service, a playful joke to add to your profile image. In fact, all the bots' profile images were masked with this feature so that they could not be run through e.g. Google's reverse image search to confirm abuse of profile images. In the end the chat bots were using 17,000 stolen, maybe slightly altered IDs of existing people. Carmen also noted that the masks would not work anymore, since Google can now recognize an image as a duplicate using just parts of the image or face. In connection with the Ashley Madison bots, the army of human CAPTCHA solvers was also mentioned in the talk. There is an effective business model exploiting cheap labor to solve CAPTCHAs for bots almost in real time.

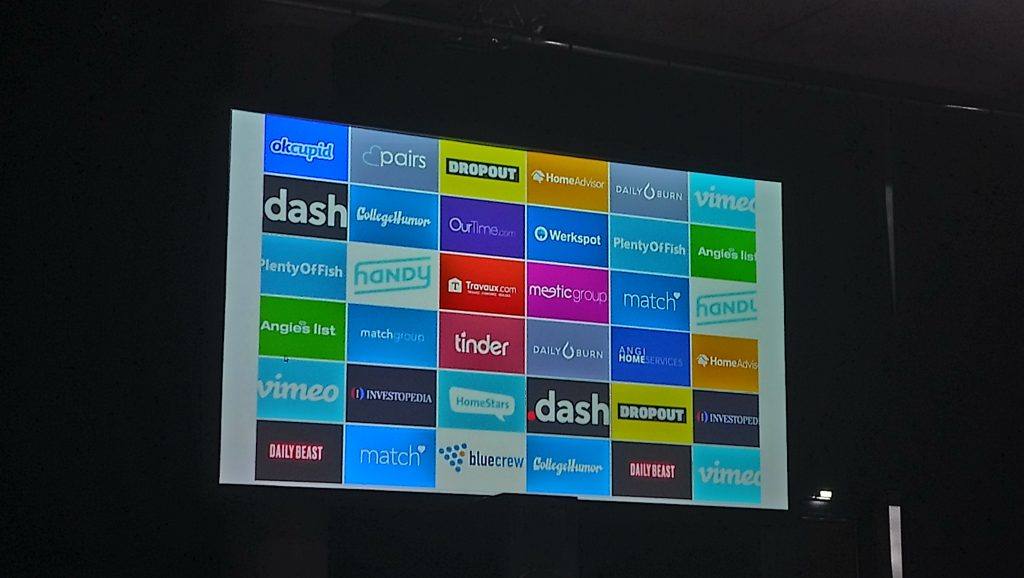

Joana Moll continued talking about the “dating business”. Together with the Tactical Tech Collective she has researched how dating profile data is shared and sold by data brokers. For her work The Dating Brokers she bought one million profiles for 136€. These profiles (partly anonymised) can be browsed using the interface she created. Additionally, an extensive report on the research part of the project can be read in The Dating Brokers: An autopsy of online love. The report describes common practices of so-called White Label Dating. Since no one wants to be the first person to register on a dating platform, it is common practice to share data among groups of companies. When agreeing to the user terms, one's profile can be shared among “partners”, which can mean up to 700 companies having legal access to the data. Additionally, the profiles are sold in bundles, like the one million profiles Joana bought from the dating service Plenty of Fish. The data set included about 5 million images, and I would not be surprised if these images end up being fed into neural networks hungry for faces to recognize.

There were several interesting talks about the commons, machine learning, affect and other topics, yet the talks described here are the ones that more or less relate to my research.

Exhibitions

Somewhat disappointed, I had to realize that there was no transmediale exhibition this year. As a part of the Partner program there was a related exhibition, Creating Empathy, at Spectrum Project space, but due to impractical opening times I was not able to make it there. Transmediale's partner festival CTM 2019 hosted the exhibition Persisting Realities, which I was able to visit. I was excited to see Helena Nikonole's deus X mchn after reading about the work in her essay in the book Internet of Other People's Things (edited by me and Andreas Zingerle). Therefore I was a bit disappointed by the presentation of her work, with partly dysfunctional screens and media players. The work uses both surveillance cameras and AI, making us aware of being watched. Some of the other works awoke my curiosity, yet overall the exhibition left me unemotional. My exhibition experience was saved from being a total flop by the Ars Electronica Export exhibition, which was outside the transmediale program, and therefore you can read more about it here.